TeamDrive Web Portal Administration

Disabling the Apache Access Log

In the default setup, Apache is used as a reverse proxy to route all calls from the TeamDrive browser App to the Docker containers. This can generate a large number of requests, so keeping the normal access log activated is of little use. We therefore recommend deactivating it in a production environment and leaving only the error log enabled. To do this, comment out the following line in the default httpd.conf:

# CustomLog logs/access_log combined

If problems occur, logging can be activated for a specific user (see http://httpd.apache.org/docs/2.2/mod/mod_log_config.html). For example, the following directives log all access to the TeamDrive Agent using port 49153 (the required Apache logging module needs to be enabled again):

SetEnvIf Request_URI 49153 agent-49153

CustomLog logs/agent-49153-requests.log common env=agent-49153

Restart the Apache instance and check the log files for errors.

You can discover the port used by an agent by using the command:

[root@webportal ~]# docker ps -a | grep <username>

The port is shown in the sixth column (PORTS) of the output, which has the form:

0.0.0.0:<agent-port>->4040/tcp, e.g. 0.0.0.0:49153->4040/tcp.
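When many agents are running, you can also extract the port from the PORTS column programmatically. The following is a minimal Python sketch. It assumes the column has the form shown above, with container port 4040. agent_port is a hypothetical helper name.

```python
import re
from typing import Optional

# Matches e.g. "0.0.0.0:49153->4040/tcp" and captures the host-side agent port.
# Assumes the container-side port is 4040, as in the example output above.
_PORT_MAPPING = re.compile(r"(?:\d{1,3}\.){3}\d{1,3}:(\d+)->4040/tcp")


def agent_port(ports_column: str) -> Optional[int]:
    """Return the host port mapped to container port 4040, or None if absent."""
    match = _PORT_MAPPING.search(ports_column)
    return int(match.group(1)) if match else None
```

For example, agent_port("0.0.0.0:49153->4040/tcp") returns 49153. If Docker also lists an IPv6 mapping, the sketch uses the first IPv4 match.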

Changing an Admin User’s Password

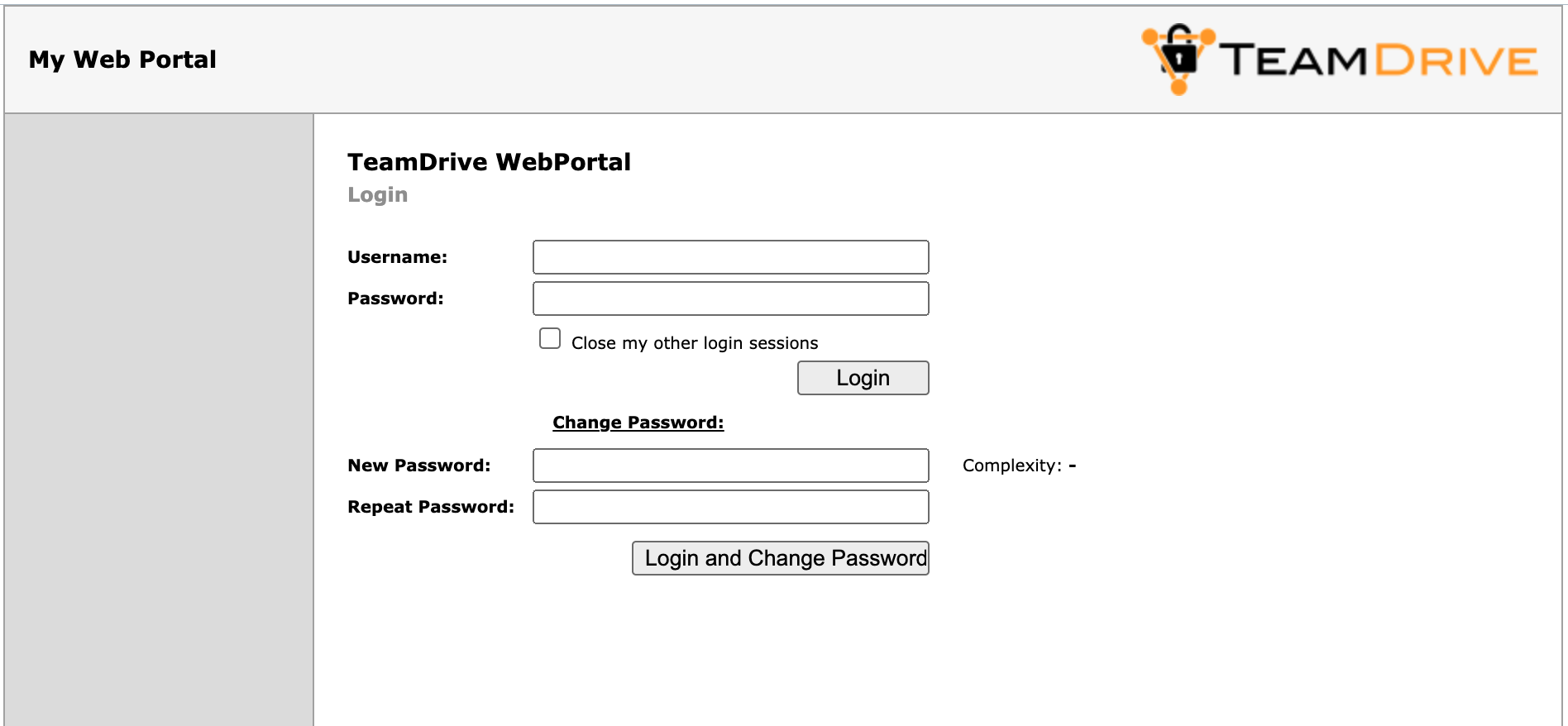

The Web Portal Administration Console can be accessed by all Admin Users by entering the correct username and password.

An existing user with administrative privileges can change his password directly via the Administration Console’s login page or via the Admin Users page of the Administration Console.

On the login page, click Change Password... to enable the two input fields New Password and Repeat Password, which let you enter the new password twice (to ensure you did not mistype it by accident). You also need to enter your username in the Username field and the current password in the Password field above. Click Login and Change Password to apply the new password and log in.

Web Portal Administration Console: Change Password

You can also change your password while logged into the Administration Console. If your user account has “Superuser” privileges, you can change the password of any admin user, not just your own.

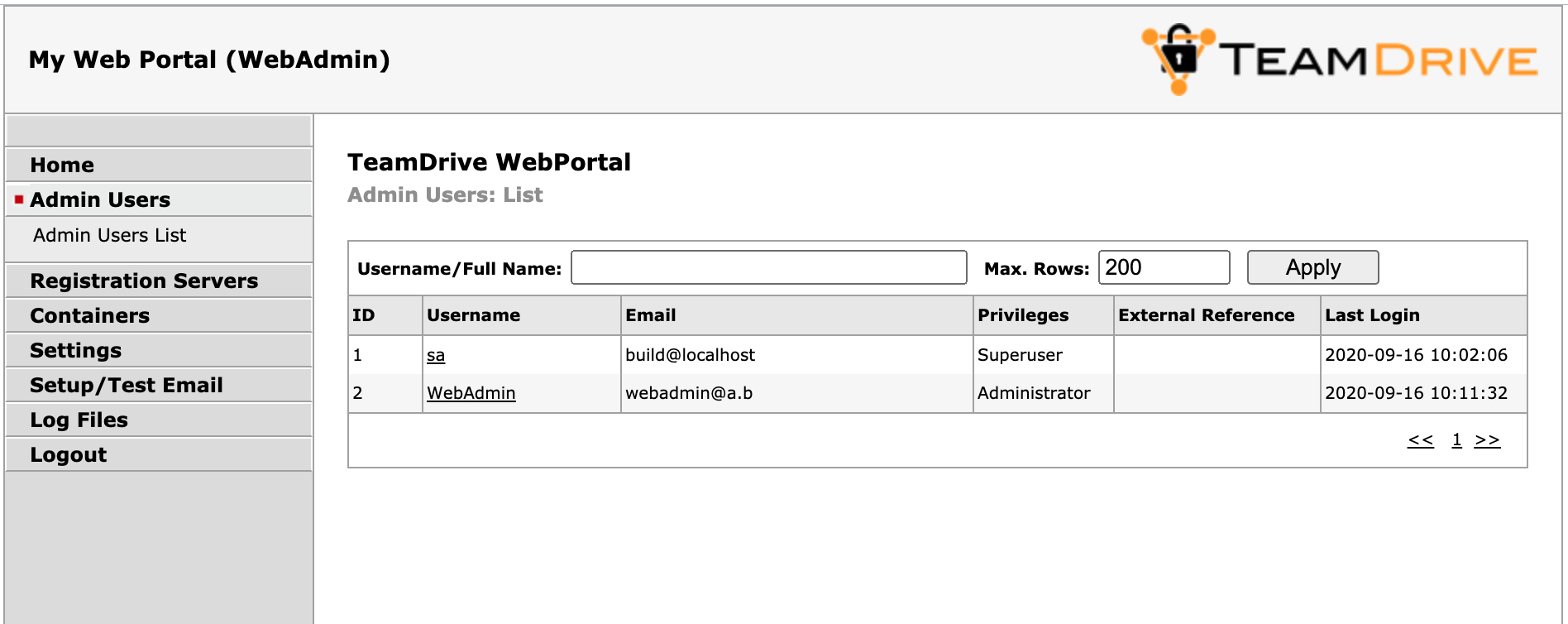

Click User List to open the user administration page.

The page will list all existing user accounts and their details.

Web Portal Administration Console: Admin Users List

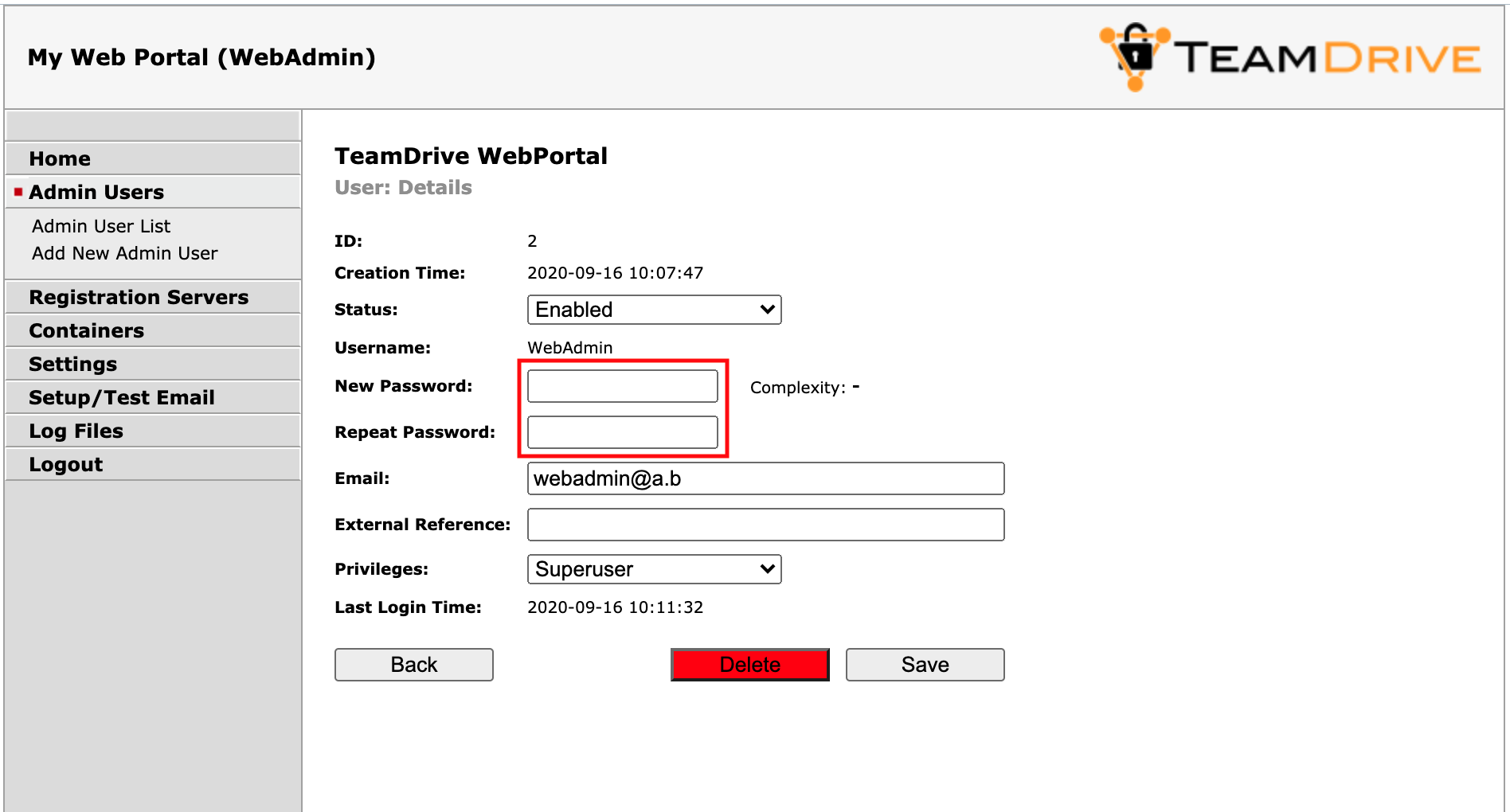

Click the username of the account you want to modify. This will bring up the user’s details page.

Web Portal Administration Console: User Details

To change the password, enter the new password into the input fields New Password and Repeat Password and click Save to commit the change.

The new password will be required the next time this user logs into the Administration Console.

If you have lost or forgotten the password of the last user with Superuser privileges (e.g. the default HostAdmin user), you need to reset the password by removing the current hashed password stored in the MySQL database (column Password in table webportal.WP_Admin). You can do this using the following SQL queries.

Log into the MySQL database using the teamdrive user and the corresponding database password:

[root@webportal ~]# mysql -u teamdrive -p

Enter password:

[...]

mysql> use webportal;

Database changed

mysql> SELECT * FROM WP_Admin WHERE UserName='HostAdmin'\G

*************************** 1. row ***************************

ID: 1

Status: 0

UserName: HostAdmin

Email: root@localhost

Password: $2y$10$JIhziNetygYCeIXU3gXveue2BTqwCs4vwA6LHNUKZVt8V.U8jtkcW

ExtReference: NULL

Privileges: Superuser

CreationTime: 2015-08-10 11:26:10

LastLoginTime: 2015-08-10 11:53:06

1 row in set (0.00 sec)

mysql> UPDATE WP_Admin SET Password='' WHERE UserName='HostAdmin';

Query OK, 1 row affected (0.01 sec)

Rows matched: 1 Changed: 1 Warnings: 0

mysql> quit

Bye

Now you can enter a new password for the HostAdmin user via the login page as outlined above. Click the Change Password link, leave the Password field empty, enter the new password twice, and then click the Login and Change Password button.

Configuring a Proxy for Outgoing Connections of Docker and the Containers

To use a proxy for outgoing connections, you have to configure three parts:

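docker: As described in the Docker documentation (https://docs.docker.com/config/daemon/systemd/#httphttps-proxy), add the following line to the already existing configuration file /etc/systemd/system/docker.service.d/startup_options.conf:

Environment="HTTP_PROXY=http://proxy.example.com:80/"

Afterwards, reload the systemd configuration (systemctl daemon-reload) and restart the Docker service.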

container: Use the custom build functionality described in Creating a Customised Agent Docker Image to set the proxy within the container. Modify the BuildDockerfile setting and add the following line before the “yum -y update” call:

RUN echo "proxy=http://proxy.example.com:80/" >> /etc/yum.conf

If your network only supports IPv4, also add this line:

RUN echo "ip_resolve=4" >> /etc/yum.conf

teamdrive: Add the following option to the ClientSettings setting (TeamDrive Agent 4.6.11 build 2640 or newer required):

http-proxy=http://proxy.example.com:80/

Depending on your setup, you may then need to rebuild the container image. See ClientSettings for details.
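The same proxy URL must appear in all three places, so it can help to generate the fragments from one value. This Python sketch only renders the text shown above and writes no files. proxy_snippets is a hypothetical helper.

```python
from urllib.parse import urlsplit


def proxy_snippets(proxy_url: str, ipv4_only: bool = False) -> dict:
    """Render the three proxy configuration fragments from one proxy URL."""
    parts = urlsplit(proxy_url)
    if parts.scheme not in ("http", "https") or not parts.hostname:
        raise ValueError(f"not an http(s) proxy URL: {proxy_url!r}")

    # Line for /etc/systemd/system/docker.service.d/startup_options.conf
    docker = f'Environment="HTTP_PROXY={proxy_url}"'

    # Lines for the BuildDockerfile setting, placed before the "yum -y update" call
    dockerfile = [f'RUN echo "proxy={proxy_url}" >> /etc/yum.conf']
    if ipv4_only:
        dockerfile.append('RUN echo "ip_resolve=4" >> /etc/yum.conf')

    # Option for the ClientSettings setting (agent 4.6.11 build 2640 or newer)
    client_settings = f"http-proxy={proxy_url}"

    return {"docker": docker, "dockerfile": dockerfile, "client_settings": client_settings}
```

For example, proxy_snippets("http://proxy.example.com:80/", ipv4_only=True) returns exactly the lines listed in this section.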

How to Enable Two-Factor Authentication

Two-factor authentication (2FA) can be enabled in two different areas:

- 2FA for the Web Portal Administrators

- 2FA for the users of the Web Portal

How to enable two-factor authentication for administrators is described in the section below (Enabling Two-Factor Authentication for Administrators).

Two-factor authentication (2FA) for Web Portal users requires Registration Server version 3.6 or later. The Registration Server implements 2FA using the Google Authenticator App (https://support.google.com/accounts/answer/1066447?hl=en).

To enable 2FA for users, set AuthServiceEnabled to True, and leave the associated settings AuthLoginPageURL, AuthTokenVerifyURL and RegistrationURL blank.

As described in Web Portal Settings, these settings default to login and registration pages provided by the Web Portal. The Web Portal pages redirect to the associated pages provided by the Registration Server.

On the Registration Server, the pages can optionally be customised using the template system. The templates to be modified are: portal-login, portal-lost-pwd, portal-register, portal-activate, portal-login-ok, portal-goog-auth-setup, portal-goog-auth-login, and portal-goog-auth-login-ok.

If you would like to allow users to register directly via the Web Portal, set RegistrationEnabled to True.

To set up two-factor authentication, users must be directed to the page:

https://webportal.yourdomain.com/portal/setup-2fa.html

This page provides instructions on how to configure Google Authenticator for the user’s account.

Note

If you upgraded from Web Portal 1.0.5 to a newer version, check the Apache ssl.conf for the following additional RewriteRule:

RewriteRule ^/portal(.*)$ /yvva/portal$1 [PT]

See Configure mod_ssl for details.

On the Registration Server, you must add the domain name of the Web Portal (as specified by WebPortalDomain) to the Provider setting API_WEB_PORTAL_IP. To do this, add the domain name on a line beneath the IP address of the Web Portal, which you have already set (as described in Associating the Web Portal with a Provider).
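API_WEB_PORTAL_IP holds one entry per line, so the resulting value lists the IP address and the domain on separate lines. The following Python sketch shows that layout. add_portal_domain is a hypothetical helper, and 203.0.113.10 is a documentation example address, not a real setting value.

```python
def add_portal_domain(api_web_portal_ip: str, domain: str) -> str:
    """Append 'domain' on its own line beneath the existing entries, unless already listed."""
    # Normalise the existing value: one non-empty entry per line.
    lines = [line.strip() for line in api_web_portal_ip.splitlines() if line.strip()]
    if domain not in lines:
        lines.append(domain)
    return "\n".join(lines)
```

For example, add_portal_domain("203.0.113.10", "webportal.yourdomain.com") yields the IP address on the first line and the domain on the second. Running it again leaves the value unchanged.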

If the Web Portal is used by several Providers, only modify the API_WEB_PORTAL_IP setting of one of the Providers. This will be the default Provider for users that register directly via the Web Portal.

Enabling Two-Factor Authentication for Administrators

The Web Portal Administration Console supports two-factor authentication via email. In this mode, an administrator with “Superuser” privileges who logs in with their username and password must also provide an authentication code, which is sent to them by email during the login process. This feature is disabled by default.

The TeamDrive Web Portal needs to be configured to send out these authentication email messages via SMTP. The Web Portal is only capable of sending out email using plain SMTP via TCP port 25 to a local or remote MTA.

If your remote MTA requires some form of encryption or authentication, you need to set up a local MTA that acts as a relay. See chapter Installing the Postfix MTA in the TeamDrive Web Portal Installation Guide for details.

Before you can enable two-factor authentication, you need to set up and verify the Web Portal’s email configuration. You can do this via the Web Portal’s Administration Console. You need to log in with a user account that has “Superuser” privileges to complete this step.

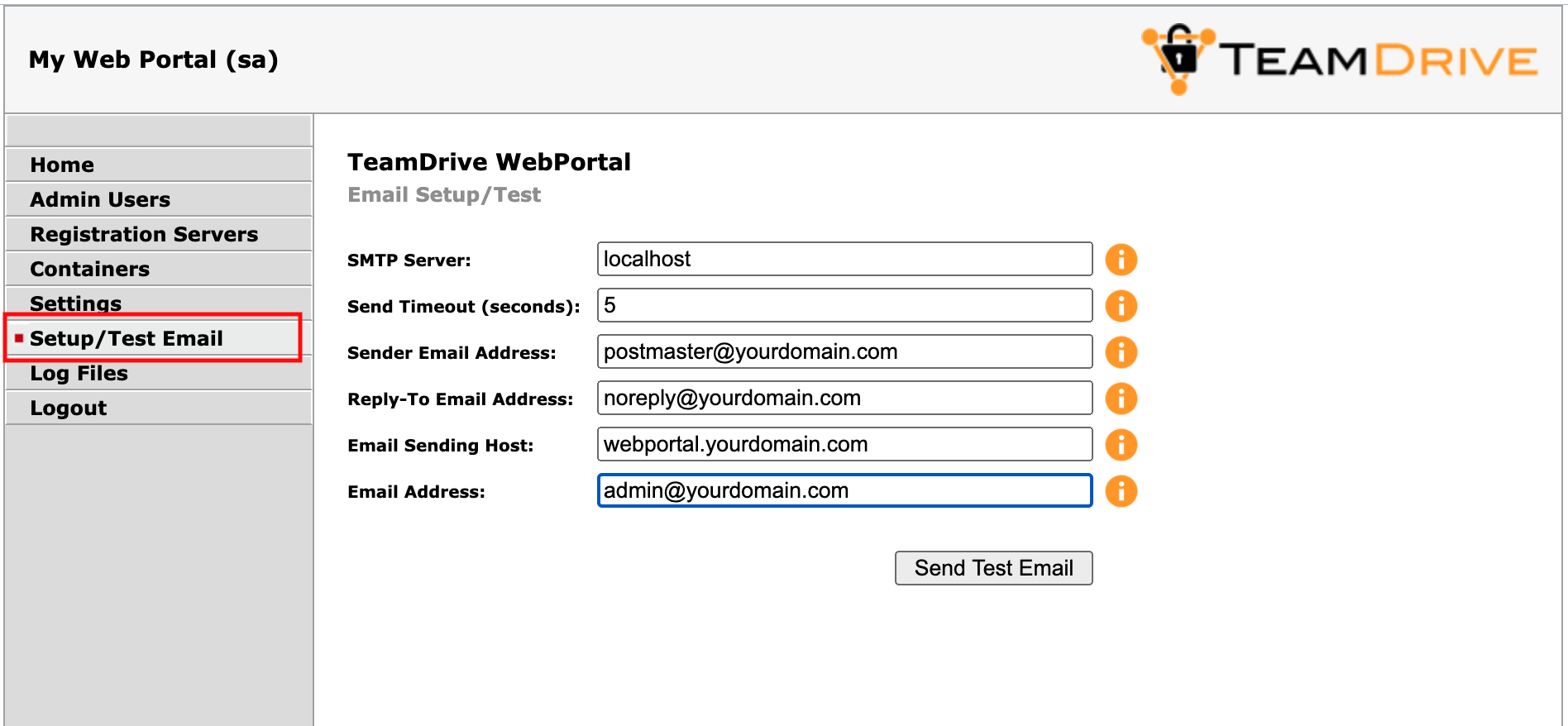

Click Setup / Test Email to open the server’s email configuration page.

Web Portal Admin Console: Email Setup / Test

Fill out the fields to match your local environment:

- SMTP Server: The host name of the SMTP server accepting outgoing email via plain SMTP. Choose localhost if you have set up a local relay server.

- Send Timeout: The timeout (in seconds) that the mail sending code should wait for a delivery confirmation from the remote MTA.

- Sender Email Address: The email address used as the sender address during SMTP delivery, e.g. postmaster@yourdomain.com. This address is also known as the “envelope address” and must be a valid email address that can accept SMTP-related messages (e.g. bounce messages).

- Reply-To Email Address: The email address used as the “From:” header in outgoing email messages. Depending on your requirements, this can simply be a “noreply” address, or an email address for your ticket system, e.g. support@yourdomain.com.

- Email Sending Host: The host name used in the HELO SMTP command, usually your Web Portal’s fully qualified domain name.

- Email Address: The primary administrator’s email address. This address is the default recipient for all emails that don’t have an explicit receiving address. During the email setup process, a confirmation email will be sent to this address.
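To rule out MTA problems independently of the Web Portal, you can reproduce the delivery path described by these fields with Python's standard smtplib module. This is a sketch, not the Web Portal's own mail code. The function names are hypothetical, the parameters follow the fields above, and it assumes the MTA accepts unauthenticated plain SMTP on port 25.

```python
import smtplib
from email.message import EmailMessage


def build_test_message(reply_to: str, recipient: str) -> EmailMessage:
    """Compose a test message; 'reply_to' is used as the From: header, as described above."""
    msg = EmailMessage()
    msg["From"] = reply_to
    msg["To"] = recipient
    msg["Subject"] = "Web Portal email test"
    msg.set_content("Test message sent via plain SMTP on port 25.")
    return msg


def send_test_message(smtp_server: str, timeout: float, sender: str,
                      sending_host: str, msg: EmailMessage) -> None:
    """Deliver 'msg' via plain SMTP (no TLS, no authentication) on TCP port 25.

    'sender' is the envelope address (MAIL FROM) and 'sending_host' the name
    announced in the greeting.
    """
    with smtplib.SMTP(smtp_server, 25, local_hostname=sending_host, timeout=timeout) as smtp:
        smtp.send_message(msg, from_addr=sender)
```

For example, send_test_message("localhost", 30, "postmaster@yourdomain.com", "webportal.yourdomain.com", build_test_message("support@yourdomain.com", "admin@yourdomain.com")) relays the message through a local Postfix instance. Note that smtplib greets the server with EHLO and falls back to HELO only if EHLO is rejected.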

After you’ve entered the appropriate values, click Send Test Email to verify the email setup. If there is any communication error with the configured MTA, an error message will be printed. Check your configuration and the MTA’s log files (e.g. /var/log/maillog of the local Postfix instance) for hints.

If the configuration is correct and functional, a confirmation email will be delivered to the email address you provided. It contains a URL that you need to click to commit your configuration changes. After clicking the URL, you will see a web page that confirms your changes.

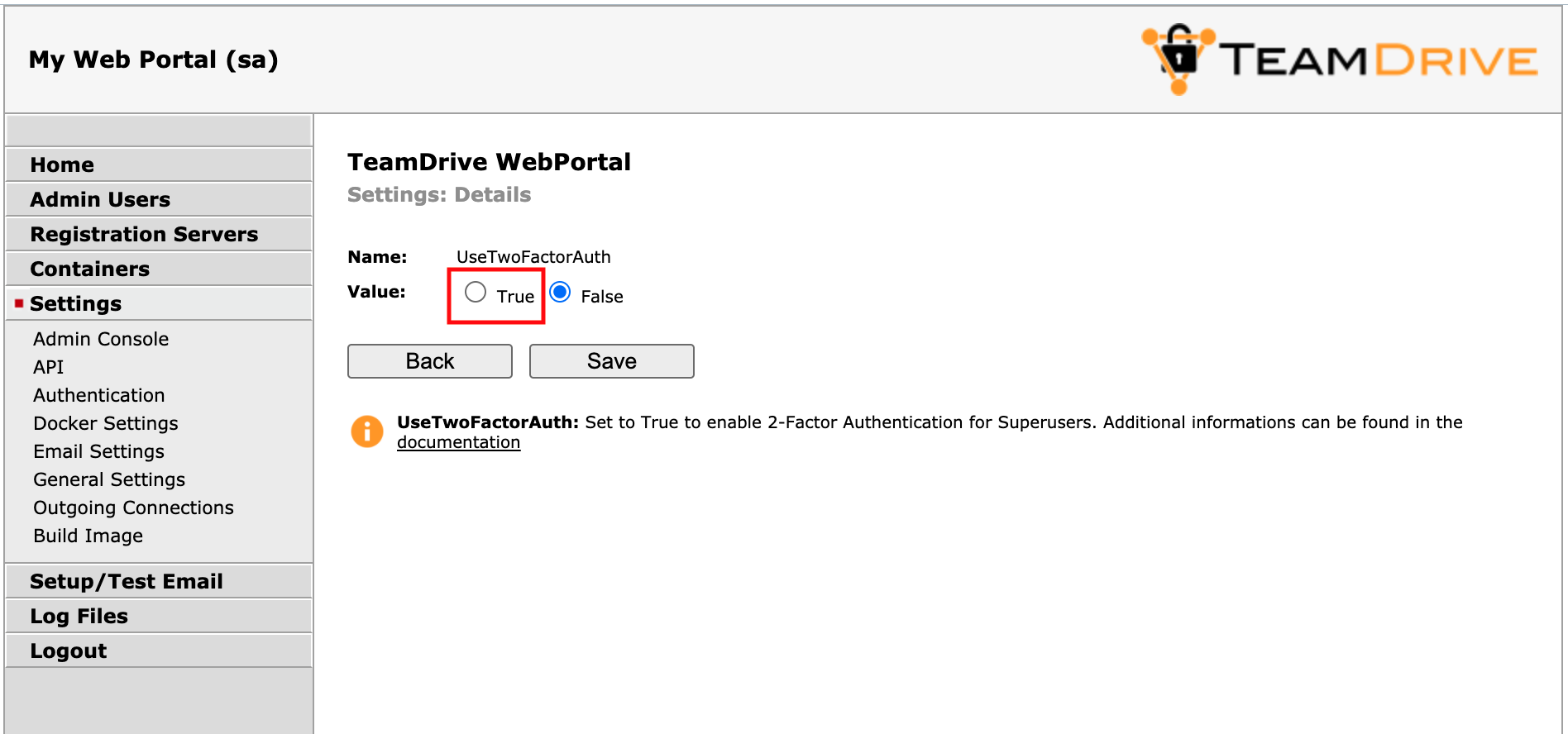

This concludes the basic email configuration of the Web Portal. Now you can enable two-factor authentication by clicking Settings -> UseTwoFactorAuth. Change the setting’s value from False to True and click Save to apply the modification.

Web Portal Admin Console: Use Two-Factor Authentication

Now two-factor authentication for the Administration Console has been enabled.

The next time you log in as a user with “Superuser” privileges, you will be asked for a random secret code after entering your username and password. The code is sent by email to the address associated with your administrator account. Enter the code into the Authentication Code input field to complete the login process.
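The login flow described above follows the usual pattern for emailed one-time codes. The sketch below illustrates that pattern in Python. It is not the Web Portal's implementation, and the six-digit length, the ten-minute validity period and all names are assumptions.

```python
import hmac
import secrets
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PendingCode:
    """A one-time login code waiting to be confirmed (illustrative only)."""
    code: str
    valid_for: float = 600.0  # assumed validity period in seconds
    issued_at: float = field(default_factory=time.time)


def issue_code(digits: int = 6) -> PendingCode:
    """Generate a random, zero-padded numeric code suitable for sending by email."""
    return PendingCode(code=f"{secrets.randbelow(10 ** digits):0{digits}d}")


def verify_code(pending: PendingCode, entered: str, now: Optional[float] = None) -> bool:
    """Reject expired codes, then compare the entered code in constant time."""
    now = time.time() if now is None else now
    if now - pending.issued_at > pending.valid_for:
        return False
    return hmac.compare_digest(pending.code.encode(), entered.strip().encode())
```

The sketch uses the secrets module because the code must be unpredictable. It uses a constant-time comparison so that timing differences do not reveal how many leading digits were correct.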

Changing the MySQL Database Connection Information

The Web Portal Apache module mod_yvva, as well as the yvvad daemon that performs the td-webportal background tasks, must be able to communicate with the MySQL management database of the Web Portal. If you want to change the password of the teamdrive user or move the MySQL database to a different host, make the following changes.

To change the MySQL login credentials, edit the file /etc/td-webportal.my.cnf. The password for the teamdrive MySQL user in the [tdweb] option group must match the one you defined earlier:

[tdweb]

database=webportal

user=teamdrive

password=<password>

host=127.0.0.1

If the MySQL database is located on a different host, make sure to modify the host variable as well, providing the host name or IP address of the host that provides the MySQL service. If required, the TCP port can be changed from the default port (3306) to any other value by adding a port=<port> option.
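To check which values the Web Portal components will pick up, you can read the [tdweb] group with Python's configparser. This sketch assumes the file contains only plain key=value options like the example above, with no !include directives. The function names are hypothetical.

```python
import configparser


def parse_tdweb(text: str) -> dict:
    """Extract the [tdweb] connection settings; 'port' falls back to MySQL's default 3306."""
    # allow_no_value tolerates bare options; interpolation=None keeps '%' in passwords literal.
    parser = configparser.ConfigParser(allow_no_value=True, interpolation=None)
    parser.read_string(text)
    section = parser["tdweb"]
    return {
        "database": section.get("database"),
        "user": section.get("user"),
        "password": section.get("password"),
        "host": section.get("host"),
        "port": section.getint("port", fallback=3306),
    }


def read_tdweb_settings(path: str = "/etc/td-webportal.my.cnf") -> dict:
    """Read and parse the Web Portal's MySQL option file."""
    with open(path, encoding="utf-8") as fh:
        return parse_tdweb(fh.read())
```

If the database is moved, read_tdweb_settings() should report the new host and, if you added one, the new port.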

Configuring Active Directory / LDAP Authentication Services

The Web Portal supports login using an External Authentication Service, for example Microsoft AD or LDAP.

Note

This section refers to the login of the TeamDrive users, as opposed to the administrators of the Web Portal, which is described in the section: Administrator Login using External Authentication below.

Whether such a service is used is determined automatically from the username or email address entered during login. This assumes you are using TeamDrive Agent version 4.6.11.2656 or later.

Unlike with the TeamDrive client or the standalone TeamDrive Agent, you cannot log in to the Web Portal without an existing user account. Registration is possible if you provide a “registration URL” by setting RegistrationURL.

External authentication is used if a user belongs to a Registration Server provider that has enabled external authentication. This requires setting USE_AUTH_SERVICE to True and specifying the AUTH_LOGIN_URL and VERIFY_AUTH_TOKEN_URL URLs. Alternatively, external authentication is used if the user’s email address is associated with a named external authentication service. This is explained in how to manage domains and services on the Registration Server 4.5.

Please refer to Configuring External Authentication using Microsoft Active Directory / LDAP in the TeamDrive Registration Server Administration Guide for details on how to set up an external authentication service. This document describes only the aspects that are relevant to the Web Portal.

The Web Portal still supports exclusive use of a particular external authentication service. This is activated by setting AuthServiceEnabled to True. When set to True, the Web Portal will immediately redirect users to the external service login page specified by the AuthLoginPageURL setting (see also AuthTokenVerifyURL). This limits the Web Portal login to users of this external service only. It is mostly supported for backwards compatibility with versions of the Web Portal before version 2.0.5.
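The selection rules described in this section can be summarised as a small decision function. This Python sketch is only an illustrative reading of the text, not the Web Portal's code. The Provider class, the domain_services mapping from email domains to login URLs, and the function name are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Provider:
    """Subset of Registration Server provider settings relevant to external login."""
    use_auth_service: bool = False   # USE_AUTH_SERVICE
    auth_login_url: str = ""         # AUTH_LOGIN_URL
    verify_auth_token_url: str = ""  # VERIFY_AUTH_TOKEN_URL


def external_login_url(auth_service_enabled: bool, auth_login_page_url: str,
                       provider: Optional[Provider], email: str,
                       domain_services: Dict[str, str]) -> Optional[str]:
    """Return the external login page to use, or None for a normal password login."""
    # Exclusive mode (AuthServiceEnabled): every user goes to the one configured service.
    if auth_service_enabled:
        return auth_login_page_url
    # The user's provider has enabled external authentication and set both URLs.
    if (provider and provider.use_auth_service
            and provider.auth_login_url and provider.verify_auth_token_url):
        return provider.auth_login_url
    # The user's email domain is associated with a named external service.
    domain = email.rpartition("@")[2].lower()
    return domain_services.get(domain)
```

The order of the checks reflects the text: exclusive mode overrides everything else, and the per-user rules apply only when it is off.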

For a Web Portal to access an external authentication service, you must register the domain of the Web Portal with the external service. To do this, add the Web Portal’s domain to the $allowed_origins configuration parameter of the external service. For example:

$allowed_origins = array(

"localhost:45454",

"127.0.0.1:45454",

"shop.domain.com",

"webportal.domain.com");

Here, webportal.domain.com is the domain of the Web Portal, as specified by the WebPortalDomain setting.

Note that in older versions of the external authentication service (Registration Server 3.6), the configuration parameter $webportal_domain was used in place of $allowed_origins. This implementation was restricted to a single login origin (for example one Web Portal) and should be upgraded to support multiple origins.

The external login page can either be embedded in the TeamDrive Agent GUI or use the entire browser window. You can enable embedded mode by setting UseEmbeddedLogin to True. In this case, the external login page will be shown embedded in an iFrame in the Agent GUI.

However, for security reasons, not all external authentication services support embedding in an iFrame (for example Microsoft Azure). The UseEmbeddedLogin setting applies to all authentication services used by the Web Portal. If one of them does not support iFrames, you need to set UseEmbeddedLogin to False (which is the default).

Administrator Login using External Authentication

The Administration Console of the Web Portal may use External Authentication such as LDAP or Active Directory. If the administrators of the Web Portal are stored and managed by such a service, their credentials can be checked by that service instead of being stored and checked in the Web Portal database.

There are two system settings that control this behaviour: ExtAuthEnabled and ExtAuthURL. ExtAuthEnabled must be set to True. ExtAuthURL specifies a URL that will verify the external authentication.

On login, if external authentication is enabled, the Web Portal will perform an HTTP POST to the URL specified by ExtAuthURL, passing two parameters: username and password. The page is expected to return an XML reply of the following form:

<?xml version='1.0' encoding='UTF-8'?>

<teamdrive>

<user>

<id>unique-user-id</id>

<email>users-email-address</email>

</user>

</teamdrive>

If an error occurs, for example an “Incorrect login”, then the ExtAuthURL page must return:

<?xml version='1.0' encoding='UTF-8'?>

<teamdrive>

<error>

<message>error-message-here</message>

</error>

</teamdrive>

Such a page can easily be implemented in PHP, for instance. An example implementation of the ExtAuthURL page for LDAP and Active Directory is available upon request from TeamDrive Systems (please contact sales@teamdrive.com).
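As an alternative to PHP, you can produce the reply format above with Python's standard library. This is a minimal sketch under several assumptions. The POST body is assumed to be form-encoded (application/x-www-form-urlencoded), and errors are assumed to be returned with HTTP status 200. check_credentials is a hypothetical placeholder where the actual LDAP or Active Directory lookup would go.

```python
import xml.etree.ElementTree as ET
from http.server import BaseHTTPRequestHandler
from typing import Optional, Tuple
from urllib.parse import parse_qs


def success_xml(user_id: str, email: str) -> bytes:
    """Build the <teamdrive><user>...</user></teamdrive> reply for a successful login."""
    root = ET.Element("teamdrive")
    user = ET.SubElement(root, "user")
    ET.SubElement(user, "id").text = user_id
    ET.SubElement(user, "email").text = email
    return ET.tostring(root, encoding="UTF-8", xml_declaration=True)


def error_xml(message: str) -> bytes:
    """Build the <teamdrive><error>...</error></teamdrive> reply, e.g. for "Incorrect login"."""
    root = ET.Element("teamdrive")
    error = ET.SubElement(root, "error")
    ET.SubElement(error, "message").text = message
    return ET.tostring(root, encoding="UTF-8", xml_declaration=True)


def check_credentials(username: str, password: str) -> Optional[Tuple[str, str]]:
    """Hypothetical placeholder: verify against LDAP/AD here and return (id, email)."""
    return None


class ExtAuthHandler(BaseHTTPRequestHandler):
    """Answers the HTTP POST that the Web Portal sends to ExtAuthURL."""

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        form = parse_qs(self.rfile.read(length).decode("utf-8"))
        username = form.get("username", [""])[0]
        password = form.get("password", [""])[0]
        result = check_credentials(username, password)
        body = success_xml(*result) if result else error_xml("Incorrect login")
        self.send_response(200)
        self.send_header("Content-Type", "text/xml; charset=UTF-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)
```

To try it locally, run HTTPServer(("127.0.0.1", 8080), ExtAuthHandler).serve_forever() (HTTPServer is also in http.server). A production endpoint should be served over HTTPS, since the password is part of the request.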

Web Portal Backup Considerations

The extent to which backup and failover is performed depends entirely on the service level you wish to provide.

To secure the configuration of the Web Portal, you must make a backup of the webportal MySQL database. Loss of the database will require a complete re-install of the Web Portal.

Quick recovery from failure of the Web Portal can be provided by replicating the webportal database to a standby machine.

You should also ensure that you have a backup of all the configuration files described here: List of relevant configuration files. However, these files are rarely changed after the initial setup.

A standby Docker host is also recommended if a high level of availability is required. If the contents of the ContainerRoot are lost due to disk failure or failure of the Docker host, users will have to re-enter their Spaces after they log into the Web Portal again. The only data lost in this case are files that were being uploaded when the failure occurred. All other Space data is stored by the TeamDrive Hosting Service and can be recovered from there.

To ensure a high level of availability, a standby Docker host may be used, and the contents of the ContainerRoot path can be copied to the standby system using rsync. Alternatives depend on the type of volume mounted at ContainerRoot. If the file system has sufficient redundancy and can be mounted by the standby system at any time, then no further considerations are required.

Note that it is not necessary to make a backup of Docker containers, as these are automatically re-created when a user logs in.
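You could script the two copies recommended in this section, a dump of the webportal database and an rsync mirror of ContainerRoot, along the following lines. This is a sketch. The function names are hypothetical, /teamdrive is used only as an example ContainerRoot, and the standby host name is a placeholder. The commands are built as argument lists for subprocess.run rather than executed here.

```python
import shlex
from typing import List


def mysqldump_command(user: str = "teamdrive", database: str = "webportal") -> List[str]:
    """Dump the Web Portal database; -p makes mysqldump prompt for the password."""
    # --single-transaction takes a consistent snapshot of InnoDB tables without locking them.
    return ["mysqldump", "-u", user, "-p", "--single-transaction", "--databases", database]


def rsync_command(container_root: str, standby_host: str) -> List[str]:
    """Mirror ContainerRoot to the same path on a standby Docker host."""
    src = container_root.rstrip("/") + "/"  # trailing slash: copy the directory's contents
    return ["rsync", "-a", "--delete", src, f"{standby_host}:{src}"]


def as_shell(cmd: List[str]) -> str:
    """Quote an argument list for display or for use in a cron entry."""
    return shlex.join(cmd)
```

For example, subprocess.run(rsync_command("/teamdrive", "standby.yourdomain.com"), check=True) performs one synchronisation pass. The mysqldump command writes to standard output, so redirect it to a file. Note that --delete also removes files on the standby that no longer exist on the primary.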

Setting up Server Monitoring

It’s highly recommended to set up some kind of system monitoring, to receive notifications in case of any critical conditions or failures.

Since the TeamDrive Web Portal is based on standard Linux components like the Apache HTTP Server and the MySQL database, almost any system monitoring solution can be used to monitor the health of these services.

We recommend using Nagios or a derivative like Icinga or Centreon. Other well-established monitoring systems like Zabbix or Munin will also work. Most of these offer standard checks to monitor CPU usage, memory utilization, disk space and other critical server parameters.

In addition to these basic system parameters, the existence and operational status of the following services/processes should be monitored:

- The MySQL Server (system process mysqld) is up and running and answering SQL queries

- The Apache HTTP Server (httpd) is up and running and answering HTTP requests (this can be verified by accessing https://webportal.yourdomain.com/index.html and https://webportal.yourdomain.com/admin/index.html)

- The td-webportal service is up and running (process name yvvad)
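If no ready-made check exists for a service, a small custom plugin can cover the three items above. This Python sketch assumes a Linux host, since it reads process names from /proc, and it uses the standard Nagios plugin exit codes. The function names are hypothetical. A process check alone does not prove that mysqld answers queries, so a real MySQL check (for example Nagios' check_mysql) is still advisable.

```python
import glob
import urllib.request
from typing import Dict, Tuple

# Standard Nagios plugin exit codes.
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3


def process_running(name: str) -> bool:
    """Return True if a process with exactly this name exists (Linux /proc only)."""
    for comm_file in glob.glob("/proc/[0-9]*/comm"):
        try:
            with open(comm_file, encoding="utf-8", errors="replace") as fh:
                if fh.read().strip() == name:
                    return True
        except OSError:
            continue  # the process exited while we were scanning
    return False


def url_ok(url: str, timeout: float = 10.0) -> bool:
    """Return True if the URL answers with HTTP 200."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except OSError:  # covers URLError, HTTPError and timeouts
        return False


def summarize(results: Dict[str, bool]) -> Tuple[int, str]:
    """Turn individual check results into a Nagios-style (exit code, message)."""
    failed = sorted(name for name, ok in results.items() if not ok)
    if failed:
        return CRITICAL, "CRITICAL - failed: " + ", ".join(failed)
    return OK, "OK - all checks passed"
```

A plugin script would collect the results, for example {"yvvad": process_running("yvvad"), "portal": url_ok("https://webportal.yourdomain.com/index.html")}. It would then print the message returned by summarize() and exit with its code. Note that url_ok() reports False for certificates that Python does not trust, such as self-signed ones.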

Scaling a TeamDrive Web Portal Setup

When scaling the TeamDrive Web Portal we consider each component individually. There are four components that are relevant to this discussion: the Apache Web Server, the Docker host, the MySQL Database and the Load Balancer.

The simplest configuration places all components on one machine. This is the case largely described in this document. In this case, the Apache Web Server also fulfills the function of the Load Balancer. It does this with rewrite rules that direct calls from the Web client to the associated Docker container.

Even in the case of a small scale setup, we recommend placing the Docker host on a separate system. This makes it easier to manage the resources required by Docker and the TeamDrive Agent running in the containers.

Apache Web Server

The Apache Web Server host is responsible for the management of the Web Portal. This includes: the Login page, the Administration Console and the background tasks.

The scaling requirements of this component are relatively limited, as the tasks do not require many resources in terms of CPU, memory or disk space.

This means that a “scale-up” of the Apache Web Server host is probably quite sufficient to cope with a growing number of users.

Nevertheless, if the Web Portal access patterns require it, or simply to add redundancy, you can scale out the Apache Web Server by adding machines that run the identical Web Portal software.

In this case a Load Balancer is required to distribute requests to the various Apache hosts. This can be done on a simple round-robin basis or according to current load since the connections are stateless.

The Web Portal service, which runs the various background tasks, should be started on all Apache hosts.

The MySQL Database must also be moved to a separate system. See below for more details.

MySQL Database

The load on the database and the volume of data are minimal on the Web Portal. For this reason, as the load on the Web Portal increases, it should suffice to place the MySQL database on a dedicated server. Additional CPUs and memory can then be added to this system as required.

As mentioned above, if the Apache Web Server is scaled out, the MySQL database must be placed on a separate system even if load does not require it. Otherwise, the MySQL database can remain on the same system as the Apache Web Server.

Docker Service

The specific hardware requirements of the system running the Docker service are described here: Hardware Requirements. This section discusses the issues involved in scaling out the Docker service.

Depending on the usage patterns, you will need to begin scaling out the Docker service when the number of users exceeds about 1000. In other words, a working estimate is that the Web Portal requires approximately one Docker host per 1000 users.
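The working estimate translates into a simple rounding-up calculation. The following is a minimal Python sketch. The function name is hypothetical, and the figure of 1000 users per host is the rough estimate from this section, not a hard limit.

```python
def docker_hosts_needed(users: int, users_per_host: int = 1000) -> int:
    """Rough number of Docker hosts, rounding up and never returning less than one."""
    if users < 0 or users_per_host <= 0:
        raise ValueError("users must be >= 0 and users_per_host > 0")
    # Integer ceiling division: (a + b - 1) // b
    return max(1, (users + users_per_host - 1) // users_per_host)
```

For example, 2500 users give docker_hosts_needed(2500) == 3, and 1001 users already call for a second host.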

A requirement for scale-out of the Docker system is software that manages a cluster of Docker hosts. There are a number of such tools available, including: Swarm, Shipyard, Google Kubernetes and CoreOS.

An important requirement of such systems is that they support the standard Docker API, which is used by the Web Portal. If this is the case, then the Web Portal will be able to start and manage containers in the cluster without regard to the number of hosts in the cluster.

The container storage used by a Docker cluster must be mounted by all hosts in the cluster. This means that the storage must be placed on a shared storage medium, such as an NFSv4 server or a shared-disk file system like OCFS2 or GFS2. Note that concurrent access to the same volume is required, but not concurrent access to the files on the volume. In other words, file locking is not an issue.

As of Web Portal version 1.0.10, Docker Swarm is supported, but only in the legacy standalone Swarm setup (https://docs.docker.com/swarm/overview/). The swarm mode introduced in Docker Engine v1.12.0 uses a different service model and is not supported.

The DockerHost setting must be changed to the Swarm port (default 2377).

Upgrading the TeamDrive Web Portal

There are a number of aspects to upgrading the TeamDrive software used by the Web Portal: the Web Portal software, the structure of the MySQL database and the TeamDrive agent used by the Web Portal.

There is a dependency between the TeamDrive Agent and the Web Portal, because the Web Portal serves the Web application that makes calls to the TeamDrive Agent. The Web Portal requires a MinimumAgentVersion and will make sure that you are running the required version of the TeamDrive Agent.

Since the TeamDrive agent is always backwards compatible with the Web application, you are free to use a more recent version than required. How to upgrade the TeamDrive agent is described in the following section: Upgrading the Database Structure and Docker Container Image.

To upgrade the TeamDrive Web Portal, first download the updated repository file:

[root@webportal ~]# wget -O /etc/yum.repos.d/td-webportal.repo \

http://repo.teamdrive.net/td-webportal.repo

and update the Web Portal packages using the RPM package manager:

[root@webportal ~]# yum update td-webportal yvva

An update simply replaces the existing packages while the service is running, and the services (httpd and td-webportal) are automatically restarted afterwards. After the packages are updated, proceed with the next section to update the database structure and the Docker container images.

Check the chapter Release Notes - Version 2.0 for the changes introduced in each new version. The release notes may also contain important notes that affect the upgrade itself.

Upgrading the Database Structure and Docker Container Image

The upgrade_now command described below performs two functions: it upgrades the MySQL database structure and the Docker container image used by the Web Portal. Note that some errors may occur in both the Web Portal API and the Admin Console until this command has been executed. We therefore recommend performing this step immediately after upgrading the Web Portal software.

The Docker container image used is stored in the ContainerImage setting and is set to the minimum required agent version by default (see MinimumAgentVersion).

This means that the container image will automatically be updated when you manually increase the ContainerImage, or when a newer version of the Web Portal requires a newer MinimumAgentVersion.

The upgrade of a container image cannot occur “in-place”. Instead, the old container must be removed, and a new container started which uses the new image.

During normal operation, containers are only removed when they are idle for a certain amount of time. This time is specified by the RemoveIdleContainerTime setting.

This means that if a container is in continuous use, it will never be upgraded.

For this reason, a number of settings have been added to “force” the upgrade of a container, even if the idle timeout is not exceeded. The settings that perform this task are RemoveOldImages, OldImageTimeout and OldImageRemovalTime. RemoveOldImages must be set to True to enable this functionality.

Docker container images are available from the TeamDrive public Docker repository on Docker Hub. There you will find a list of the tagged images that are available:

https://hub.docker.com/r/teamdrive/agent/tags/

To install or update an image and upgrade the database structure, start the yvva command line executable and enter upgrade_now;;.

This command will first perform any necessary database changes and then automatically check Docker Hub for the newest published agent:

[root@webportal ~]# yvva

Welcome to yvva shell (version 1.5.2).

Enter "go" or end the line with ';;' to execute submitted code.

For a list of commands enter "help".

UPGRADE COMMANDS:

-----------------

To upgrade from the command line, execute:

yvva --call=upgrade_now --config-file="/etc/yvva.conf"

upgrade_now;;

Upgrade the database structure and Docker container image (this command cannot be undone).

If you are using a custom Docker container image, please follow the steps described in the chapter Creating a Customised Agent Docker Image. If you are upgrading from version 1.1 or below to a newer version, please follow the steps in Upgrading a custom installation from Version 1.1 to 1.2.

Note

If outgoing requests have to use a proxy server, follow the Docker documentation https://docs.docker.com/engine/admin/systemd/#http-proxy to set a proxy for Docker. Restart the Docker service after adding the proxy configuration.

After a successful image update, the ContainerImage setting is changed accordingly, for example to teamdrive/agent:4.5.5.1838.

At this point, the values of the settings OldImageTimeout and OldImageRemovalTime take effect.

OldImageTimeout is the time, in seconds, that a container with an old image (an image other than ContainerImage) must be idle before it is removed. Zero means the container is removed immediately, even if it is running. Note that if RemoveOldImages is False, this setting is ignored.
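The rule for OldImageTimeout can be restated as a small predicate. This Python sketch is an illustrative reading of the description above, not the td-webportal code. The function and parameter names are hypothetical.

```python
def should_remove_old_container(image: str, container_image: str, idle_seconds: float,
                                remove_old_images: bool, old_image_timeout: float) -> bool:
    """Decide whether a container running an outdated image is due for removal."""
    # The setting is ignored unless RemoveOldImages is True, and it only
    # concerns containers whose image differs from ContainerImage.
    if not remove_old_images or image == container_image:
        return False
    # Zero removes the container immediately, even while it is running;
    # otherwise the container must have been idle for at least the timeout.
    return old_image_timeout == 0 or idle_seconds >= old_image_timeout
```

Containers that already use the current ContainerImage are never affected by this rule. They are still subject to the normal RemoveIdleContainerTime handling.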

OldImageRemovalTime specifies when containers with old images should be removed. Set this setting to a specific time of day (format hh:mm, e.g. 03:00) or to a specific date (format YYYY-MM-DD hh:mm). This is the time when the upgrade will take place, which forces a running container to be removed and re-created.

If you want to force the upgrade immediately, set this setting to “now”. You can disable this setting by setting it to “never”. In this case, the upgrade is controlled by the OldImageTimeout setting.
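A small parser can illustrate the accepted formats of OldImageRemovalTime. This Python sketch assumes that a plain hh:mm value means the next occurrence of that time of day. The function name and that interpretation are assumptions, not taken from the td-webportal code.

```python
from datetime import datetime, timedelta
from typing import Optional


def next_removal_time(value: str, now: datetime) -> Optional[datetime]:
    """Interpret an OldImageRemovalTime value relative to 'now'.

    "now"              -> now (remove immediately)
    "never"            -> None (no scheduled removal)
    "YYYY-MM-DD hh:mm" -> that exact moment
    "hh:mm"            -> the next occurrence of that time of day
    """
    value = value.strip().lower()
    if value == "now":
        return now
    if value == "never":
        return None
    try:
        return datetime.strptime(value, "%Y-%m-%d %H:%M")
    except ValueError:
        pass
    clock = datetime.strptime(value, "%H:%M")  # raises ValueError for any other format
    candidate = now.replace(hour=clock.hour, minute=clock.minute, second=0, microsecond=0)
    return candidate if candidate > now else candidate + timedelta(days=1)
```

For example, at noon on 1 May 2024 the value 03:00 resolves to 03:00 on 2 May, while 23:30 resolves to the same evening.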

You will find more on the upgrade process in the description of the tasks that perform these functions, see Background Tasks Performed by td-webportal.

Moving the Docker Storage Layer to an External Volume

The user data for the TeamDrive agent containers is located in /teamdrive and this will be the largest part of the necessary storage for hosting the Web-Portal.

Docker itself needs its own storage for the container storage layer, as described in the Docker documentation:

https://docs.docker.com/storage/storagedriver/

By default, Docker uses the overlay2 storage driver for all of its data, which is stored in /var/lib/docker.

If necessary, the Docker storage layer can be moved to a separate volume. How to set up this volume is described in the Docker documentation.
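Docker relocates its storage through the data-root option in /etc/docker/daemon.json. The following Python sketch sets that option while keeping any other options already present. The function name and the example target path are hypothetical. Stop the Docker service and copy the existing contents of /var/lib/docker to the new location before restarting Docker. Note that dockerd refuses to start if the same option is given both as a command-line flag (for example in /etc/systemd/system/docker.service.d/startup_options.conf) and in daemon.json.

```python
import json
import os


def set_docker_data_root(new_root: str, daemon_json: str = "/etc/docker/daemon.json") -> dict:
    """Set "data-root" in Docker's daemon.json, preserving all other options."""
    config = {}
    if os.path.exists(daemon_json):
        with open(daemon_json, encoding="utf-8") as fh:
            text = fh.read().strip()
        config = json.loads(text) if text else {}  # an empty file counts as no options
    config["data-root"] = new_root
    with open(daemon_json, "w", encoding="utf-8") as fh:
        json.dump(config, fh, indent=2)
        fh.write("\n")
    return config
```

For example, set_docker_data_root("/mnt/docker-data") leaves an existing "log-driver" entry untouched and adds the new storage location.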

Upgrading a custom installation from Version 1.1 to 1.2

Note

This step is only necessary when updating from version 1.1 or below to version 1.2 (or later), in order to define your Build Image settings. Once you have set your build settings, the update process is identical to the normal update process: just execute upgrade_now;; in the yvva command line.

The “White Label” GUI Web Portal RPM is no longer necessary and the existing package must be removed. Search for the old installed packages:

[root@webportal ~]# rpm -qa | grep "webportal-clientui"

and remove all listed packages using:

[root@webportal ~]# rpm -e <full package name>

Now download the updated repository:

[root@webportal ~]# wget -O /etc/yum.repos.d/td-webportal.repo \

http://repo.teamdrive.net/td-webportal.repo

and update the Web Portal packages using the RPM package manager:

[root@webportal ~]# yum update td-webportal yvva

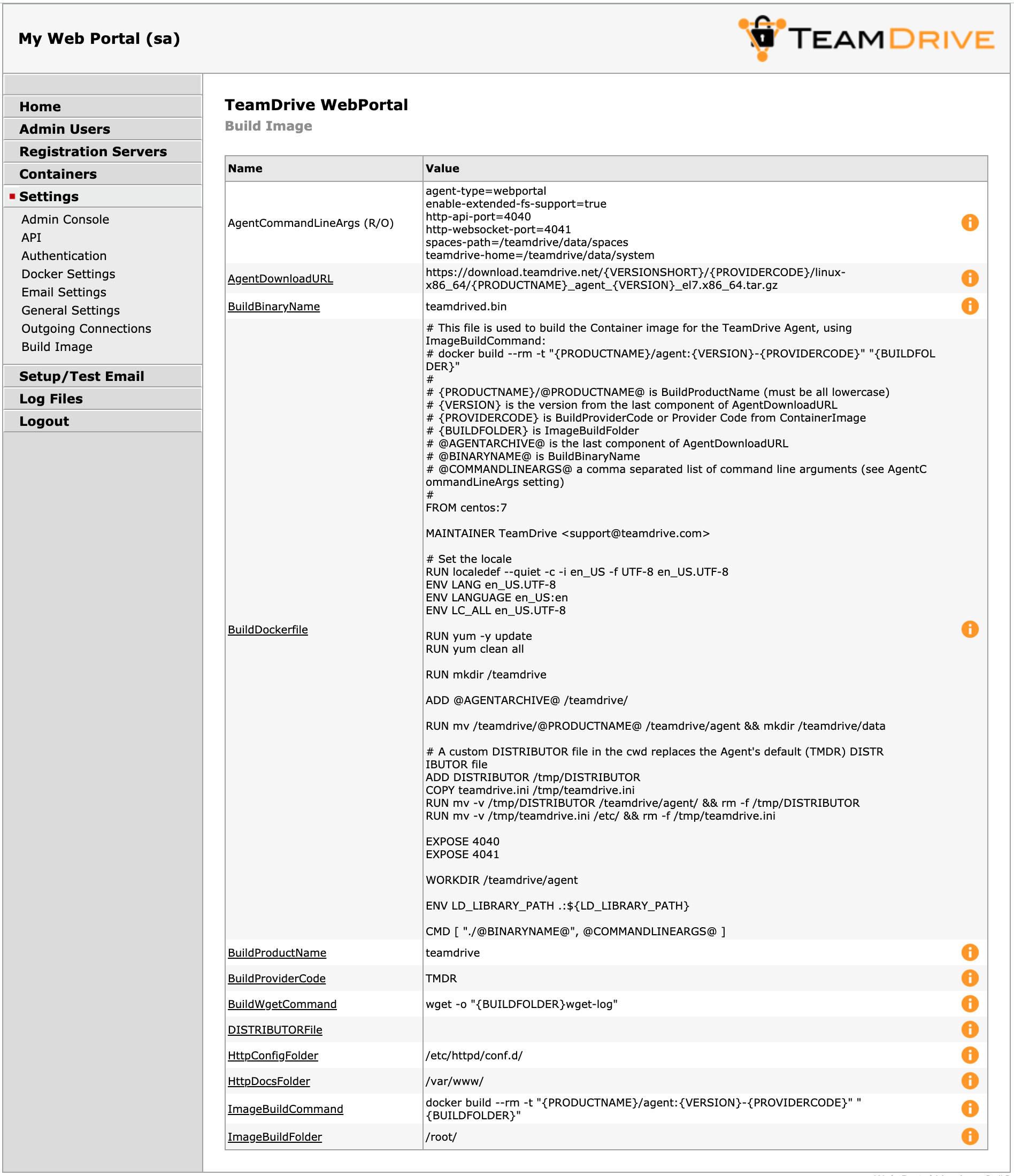

The Web Portal version 1.2 or later is capable of building a custom Docker image automatically. The description below assumes you are using a customised version of the TeamDrive Agent executable, or the Web-GUI.

Use the yvva command line (see below) or the Web Admin to fill in your Build Image product information.

Web Portal Admin Console: White label settings

The following required values are necessary to build a customised Docker image:

BuildBinaryName: The binary name of the Linux agent, ending with .bin.

BuildProductName: The first part of the Agent archive name (the tar.gz file). This value should be all lower-case. If it is not, please contact TeamDrive support.

BuildProviderCode: Your 4-letter Provider code.

In addition to setting these values, it may be necessary to modify the following settings:

AgentDownloadURL: By default, this is the link to the TeamDrive download portal: http://s3download.teamdrive.net/{VERSIONSHORT}/{PROVIDERCODE}/linux-x86_64/{PRODUCTNAME}_agent_{VERSION}_el7.x86_64.tar.gz

See AgentDownloadURL for a detailed description of this value. If your Agent archive is not located on the TeamDrive portal, then you should set the value accordingly.

The {VERSION} placeholder will be replaced by the highest version specified by the ContainerImage and MinimumAgentVersion settings.

DISTRIBUTORFile: The content of the DISTRIBUTOR file for the agent. If left empty, the DISTRIBUTOR file from the Agent archive (.tar.gz file) will be used.
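The following Python sketch shows how the {VERSION} placeholder might be chosen and how the other placeholders might be filled in. It makes several assumptions. Versions are compared numerically part by part, the ContainerImage tag is the text after the last colon, and the caller supplies the placeholder values. How {VERSIONSHORT} is derived is not described here, so the sketch leaves unknown placeholders untouched. The provider code ABCD in the usage below is hypothetical.

```python
import re
from typing import Dict, Tuple


def version_tuple(version: str) -> Tuple[int, ...]:
    """'4.6.11.2656' -> (4, 6, 11, 2656); an image prefix like 'teamdrive/agent:' is ignored."""
    tag = version.rsplit(":", 1)[-1]
    return tuple(int(part) for part in re.findall(r"\d+", tag))


def highest_version(container_image: str, minimum_agent_version: str) -> str:
    """Pick the higher of the ContainerImage tag and MinimumAgentVersion."""
    candidates = [container_image.rsplit(":", 1)[-1], minimum_agent_version]
    return max(candidates, key=version_tuple)


def expand_download_url(template: str, values: Dict[str, str]) -> str:
    """Replace {PLACEHOLDER} markers; placeholders without a value are left as-is."""
    return re.sub(r"\{([A-Z]+)\}", lambda m: values.get(m.group(1), m.group(0)), template)
```

For example, expand_download_url(template, {"VERSION": highest_version("teamdrive/agent:4.5.5.1838", "4.6.11.2656"), "PROVIDERCODE": "ABCD", "PRODUCTNAME": "teamdrive"}) fills in everything except {VERSIONSHORT}.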

To set your build settings using the yvva command line, start yvva as the root user and replace the following <your-....> placeholders with your values:

AppSetting:setSetting("BuildBinaryName", "<your-binary-name>");;

AppSetting:setSetting("BuildProductName", "<your-product-name>");;

AppSetting:setSetting("BuildProviderCode", "<your-provider-code>");;

To verify your values, execute:

print AppSetting:getSettingAsString("BuildBinaryName");;

print AppSetting:getSettingAsString("BuildProductName");;

print AppSetting:getSettingAsString("BuildProviderCode");;

The optional parameters AgentDownloadURL and DISTRIBUTORFile can be set in a similar manner (line breaks are permitted in strings). However, it is easier to change settings like DISTRIBUTORFile in the Web Admin.

After these values have been set correctly, you can build a new Docker image by starting the yvva command line and running the following command:

upgrade_now;;

The Web Portal will try to download the required Agent archive version and build the new Docker image. The individual steps are logged to the console, along with error messages if a step fails.

If the download fails, or if you want to skip the download step, place your Agent archive in the ImageBuildFolder and the update process will use it to create the Docker image. The image will be used to retrieve and update the Web-GUI as required.

More information on the process is provided in the section Creating a Customised Agent Docker Image.